Technology is advancing at an unprecedented pace, and chip technology stands at the forefront of that change. Microchips, the tiny yet powerful components found in almost every electronic device, are pivotal in shaping the digital landscape. From smartphones to artificial intelligence, chips are integral to the performance and efficiency of modern technology. In this post, we’ll explore the evolution of chip technology, its current trends, and what the future holds.

The journey of chip technology began in the late 1950s with the invention of the integrated circuit (IC). This innovation allowed multiple transistors to be fabricated on a single semiconductor substrate, drastically reducing the size and cost of electronic devices while improving performance. Early applications were primarily in computers and military equipment. However, as demand for consumer electronics grew, manufacturers began to develop smaller and more efficient chips.

The 1970s and 1980s marked a significant leap in chip technology with the introduction of microprocessors. Intel’s 4004, released in 1971, is often regarded as the first commercially available microprocessor, paving the way for personal computers. This era also saw Moore’s Law take hold as the industry’s guiding benchmark. Named after Intel co-founder Gordon Moore, it originated in his 1965 observation that the number of transistors on a chip was doubling every year, which he revised in 1975 to a doubling approximately every two years, implying exponential growth in computing power.
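To get a feel for what a two-year doubling period implies, here is a minimal Python sketch of the idealized rule. The function name `projected_transistors` and its parameters are made up for this post, and the starting figure of roughly 2,300 transistors for the Intel 4004 is a commonly cited number added here for illustration. Treat the results as a back-of-the-envelope projection, not a record of what real chips achieved.

```python
def projected_transistors(start_count, start_year, target_year,
                          doubling_period_years=2.0):
    """Idealized Moore's Law projection (illustrative helper, not a real API).

    Assumes the transistor count doubles once every `doubling_period_years`,
    with no slowdowns or jumps along the way.
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    elapsed_years = target_year - start_year
    # Number of doublings that fit in the elapsed time (may be fractional).
    doublings = elapsed_years / doubling_period_years
    return start_count * 2 ** doublings


if __name__ == "__main__":
    # Assumption: about 2,300 transistors on the Intel 4004 in 1971.
    for year in (1971, 1981, 1991, 2001):
        count = projected_transistors(2300, 1971, year)
        print(f"{year}: about {round(count):,} transistors")
```

Ten years means five doublings, or a 32-fold increase, which is why a steady two-year cadence compounds so quickly. Actual chips did not follow this curve exactly, so the numbers are best read as a rough guide to the trend.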

Today, chip technology continues to evolve rapidly, driven by several key trends:

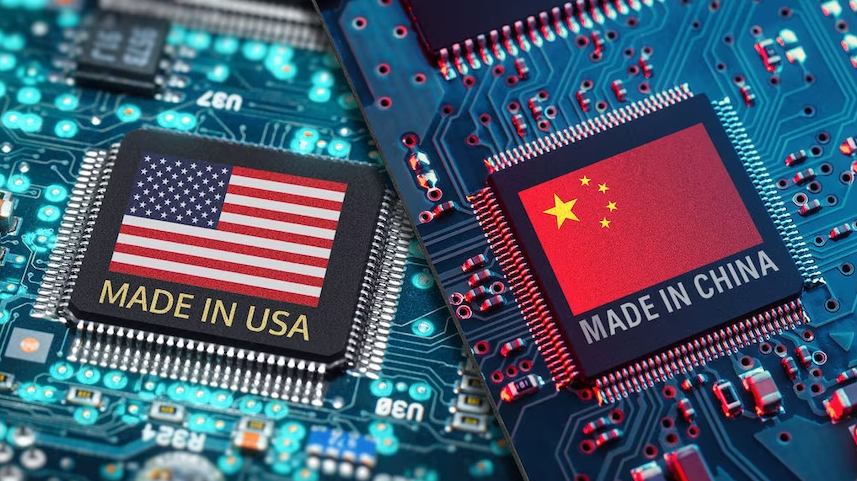

Despite the exciting advancements, the chip industry faces several challenges. The global semiconductor supply chain has been tested by geopolitical tensions, natural disasters, and the COVID-19 pandemic, leading to shortages and increased costs. Additionally, as chips become more complex, the design and manufacturing processes require significant investment and expertise. This creates barriers for new entrants and can stifle innovation.

Moreover, as we push the limits of miniaturization, physical limitations such as heat dissipation and quantum tunneling present significant hurdles. The industry must continue to innovate not only in materials and design but also in manufacturing processes to overcome these challenges.

Looking ahead, the future of chip technology appears bright yet challenging. As we move towards an increasingly digital and interconnected world, the demand for advanced chips will only grow. Innovations in areas like neuromorphic computing, which mimics the human brain’s neural architecture, could redefine how we approach problems in AI and data processing.

Additionally, as 5G technology rolls out globally, there will be a heightened need for chips that can handle higher data speeds and improved connectivity. The integration of AI capabilities into everyday devices will likely become more prevalent, requiring chips that are not only powerful but also designed to run AI workloads efficiently.

Chip technology is the backbone of our modern digital landscape, influencing everything from communication and entertainment to healthcare and transportation. As we stand on the cusp of further advancements, the continued evolution of chip technology will be crucial in shaping the future of our society. By addressing current challenges and embracing innovation, we can ensure that chip technology will not only keep pace with demand but also lead us into a new era of technological possibilities.